Investigate neural representations of cognitive tasks

Published:

Nowadays the term “artificial intelligence” seems heavily abused. What I learned in the course Introduction to Artificial Intelligence had nothing to do with computer vision, natural language processing, or recommendation systems. Personally, I would rather reserve the term for things like reinforcement learning. I receive a lot of AI news from researchers, critics, and reviewers every day, and I’m somewhat tired of the voices telling us to focus on causal inference rather than hyperparameter tuning. Most AI players do not care about the science at all, which is why I’m not surprised to see such demagogic AI news in 2018.

Since last year, I have been more interested in work on simulating animal cognition. Whether AI will ever have its own thoughts is hard to predict, but before that, AI needs to understand the world. We know that the convolutional neural network was inspired by the way the visual cortex processes images, and Mr. Hinton also showed us the Capsule Net, which is even closer to the visual cortex. But in my opinion this is still peripheral. I don’t mean it is unnecessary, but it feels like we have strong GPUs and no CPU yet. There should be a part in charge of making decisions.

So I was fascinated to read “Task representations in neural networks trained to perform many cognitive tasks”. The author said what I want to say:

Humans readily learn to perform many cognitive tasks in a short time. By following verbal instructions such as ‘release the lever only if the second item is not the same as the first’, humans can perform a novel task without any training at all. A cognitive task is typically composed of elementary sensory, cognitive, and motor processes. At the computational level, correctly performing a new task without training requires composing elementary processes that are already learned. This property, called ‘compositionality’, has been proposed as a fundamental principle underlying flexible cognitive control. In a neuronal circuit equipped with a compositional code, a new task might be represented as the algebraic sum of representations of the underlying elementary processes. Indeed, human studies have suggested that the representation of complex cognitive tasks in the lateral prefrontal cortex could be compositional.

The brain can solve different problems with a single network; that is what we see from outside the black box. What are the mechanisms inside the box? Here is the hypothesis:

Twenty cognitive tasks were used to train the neural network.

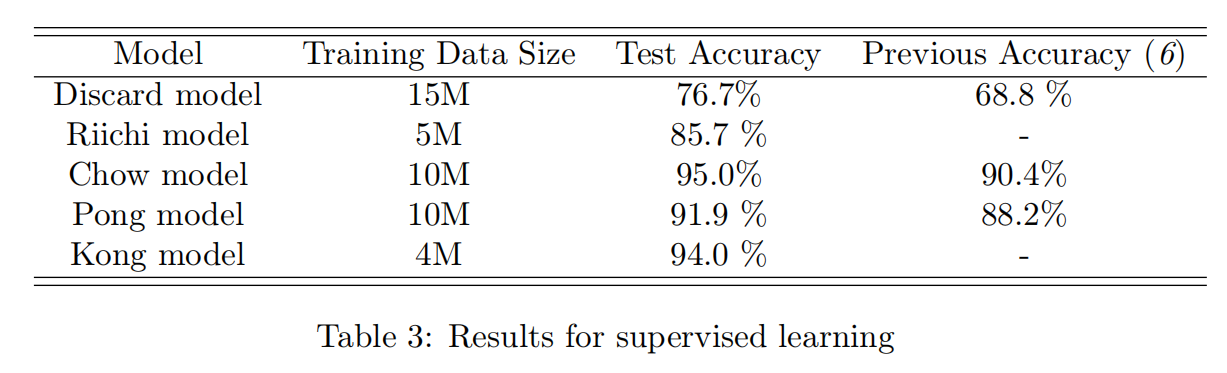

So what’s the contribution? Check their results first. With different setups, 256 networks were tested:

What I learned from these experiments is to use a ReLU-style activation function. Tanh and Retanh failed to perform well, while ReLU and Softplus did a good job. Softplus also makes the network form more clusters than ReLU.
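For readers who want to see the four activation functions side by side, here is a minimal sketch using only the Python standard library. The post does not define Retanh, so the version below assumes it means a rectified tanh, `max(0, tanh(x))`; the function names are mine, not taken from the paper's code.

```python
import math


def relu(x):
    # Rectified linear unit: zero for negative input, unbounded above.
    return max(0.0, x)


def softplus(x):
    # Smooth version of ReLU, log(1 + exp(x)), written in a
    # numerically stable form so large |x| does not overflow.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def tanh(x):
    # Saturates at -1 and +1.
    return math.tanh(x)


def retanh(x):
    # ASSUMPTION: "Retanh" is taken to be a rectified tanh.
    # Zero for negative input, saturates at +1.
    return max(0.0, math.tanh(x))


if __name__ == "__main__":
    for x in (-2.0, 0.0, 2.0, 10.0):
        print(x, relu(x), softplus(x), tanh(x), retanh(x))
```

One visible difference is that ReLU and Softplus keep growing with their input, while Tanh and Retanh saturate at 1. Whether that is the reason for the performance gap is not something this sketch can show.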

Then, with a good network structure in hand, we can examine the relationships between the neural representations of pairs of tasks. Here the author defined task variance (TV) and fractional task variance (FTV). Task variance measures the variance of a unit’s activity across all stimulus conditions in a task. The fractional task variance of a unit for a given pair of tasks is the difference between its TVs on the two tasks divided by their sum. My understanding is that TV describes how much a unit participates in a task: if its TV is low, the unit is not sensitive to that task. Building on that, FTV describes the relationship between the neural representations of a pair of tasks, which is what we want to know. Five typical relationships were observed by the researchers:

This is impressive and clear enough. Cutting part of the connections in the network further supported the proposed relationships. It is easy to understand, so I don’t think I need to elaborate.
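For reference, the TV and FTV measures described earlier can be sketched in a few lines of plain Python. This is my own reading of the definitions, not the paper's code. I assume a unit's activity is stored as `activity[condition][time_step]`, and that the variance across conditions is averaged over time steps. The function names and the toy numbers are hypothetical.

```python
from statistics import pvariance


def task_variance(activity):
    """Task variance of one unit in one task.

    activity[c][t] is the unit's activity for stimulus condition c
    at time step t. ASSUMPTION: the variance is taken across
    conditions at each time step and then averaged over time.
    """
    n_time = len(activity[0])
    per_time = [
        pvariance([condition[t] for condition in activity])
        for t in range(n_time)
    ]
    return sum(per_time) / n_time


def fractional_task_variance(tv_a, tv_b):
    """FTV of one unit for the task pair (A, B): (TV_A - TV_B) / (TV_A + TV_B)."""
    total = tv_a + tv_b
    if total == 0:
        # The ratio is undefined for a unit that is silent in both tasks.
        raise ValueError("unit has zero task variance in both tasks")
    return (tv_a - tv_b) / total


if __name__ == "__main__":
    # Toy unit: responds to the stimulus in task A, stays flat in task B.
    activity_a = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]
    activity_b = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
    tv_a = task_variance(activity_a)
    tv_b = task_variance(activity_b)
    print(tv_a, tv_b, fractional_task_variance(tv_a, tv_b))
```

By construction FTV lies between -1 and 1: a value near 1 means the unit is selective for the first task, near -1 for the second, and near 0 means it is equally involved in both.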

Another observation is the compositional representation of tasks in state space. It resembles what we saw in word vector space several years ago; you can find piles of word vector visualizations. Several experiments were performed to demonstrate this embedding in state space.
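To make the word vector analogy concrete, here is a toy sketch of what a compositional code would look like: the representation of a combined task is predicted by adding the difference between two related tasks to a third. The vectors and task labels below are made up for illustration and do not come from the paper.

```python
import math


def add(u, v):
    return [a + b for a, b in zip(u, v)]


def sub(u, v):
    return [a - b for a, b in zip(u, v)]


def cosine(u, v):
    # Cosine similarity: 1.0 means the two vectors point the same way.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# HYPOTHETICAL task representations in a 3-dimensional state space.
base = [1.0, 0.0, 0.0]           # a basic task
delayed = [1.0, 1.0, 0.0]        # the basic task plus a delay
inverted = [1.0, 0.0, 1.0]       # the basic task with the response inverted
delayed_inverted = [1.0, 1.0, 1.1]  # both modifications at once

# Compositional prediction: inverted + (delayed - base).
predicted = add(inverted, sub(delayed, base))

if __name__ == "__main__":
    print(predicted, cosine(predicted, delayed_inverted))
```

If the code is compositional, the predicted vector should point in nearly the same direction as the representation actually observed for the combined task, just as the classic word vector arithmetic examples do.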

So far we have explored learning the 20 tasks together. If the network is truly a good model of neural representation, it must retain previously learned knowledge when learning new cognitive tasks. The continual learning experiments showed how the network made this possible. But the author also said that:

The FTV distributions in continual-learning networks were substantially more mixed.

Okay, that is all I got from the results. As for the methods used in the research, you can check the Supplementary Information for details of the 20 tasks. The code is available on GitHub.